Databricks Certified Associate Developer for Apache Spark 3.5 – Python

Last Update Feb 21, 2026

Total Questions : 136 With Methodical Explanation

Why Choose CramTick



Try a free demo of our Databricks Databricks-Certified-Associate-Developer-for-Apache-Spark-3.5 PDF and practice exam software before you purchase to get a closer look at the practice questions and answers.

We provide up to 3 months of free updates after purchase so that your Databricks Databricks-Certified-Associate-Developer-for-Apache-Spark-3.5 practice questions stay current.

We have a long list of satisfied customers from multiple countries. Our Databricks Databricks-Certified-Associate-Developer-for-Apache-Spark-3.5 practice questions are designed to help you pass on the first attempt.

CramTick offers Databricks Databricks-Certified-Associate-Developer-for-Apache-Spark-3.5 PDF questions as well as web-based and desktop practice tests, all of which are regularly updated.

CramTick has a support team that answers your queries 24/7. Contact us if you run into login, payment, or download issues, and we will respond as soon as possible.

Thousands of customers have passed the Databricks Databricks Certified Associate Developer for Apache Spark 3.5 – Python exam using our products. We aim to make sure you are satisfied with our exam products.

26 of 55.

A data scientist at an e-commerce company is working with user data obtained from its subscriber database and has stored the data in a DataFrame df_user.

Before further processing, the data scientist wants to create another DataFrame df_user_non_pii and store only the non-PII columns.

The PII columns in df_user are name, email, and birthdate.

Which code snippet can be used to meet this requirement?

A data scientist is analyzing a large dataset and has written a PySpark script that includes several transformations and actions on a DataFrame. The script ends with a collect() action to retrieve the results.

How does Apache Spark™'s execution hierarchy process the operations when the data scientist runs this script?

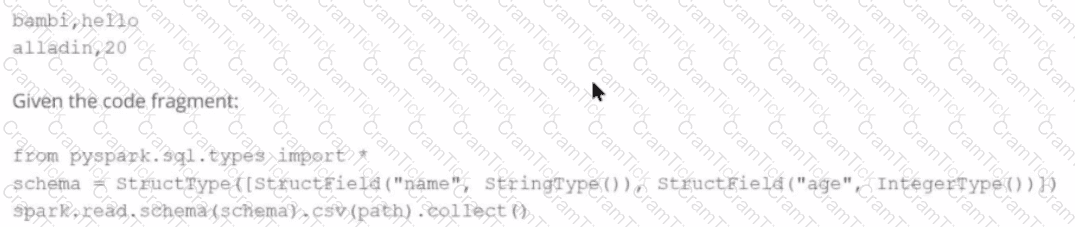

Given a CSV file with the content:

And the following code:

    from pyspark.sql.types import *

    schema = StructType([
        StructField("name", StringType()),
        StructField("age", IntegerType())
    ])

    spark.read.schema(schema).csv(path).collect()

What is the resulting output?