SnowPro Advanced: Architect Certification Exam

Last Update Feb 21, 2026

Total Questions: 182 With Methodical Explanation

Why Choose CramTick

Try a free demo of our Snowflake ARA-C01 PDF and practice exam software before the purchase to get a closer look at practice questions and answers.

We provide up to 3 months of free updates after purchase so that your Snowflake ARA-C01 practice questions stay current.

We have a long list of satisfied customers from multiple countries. Our Snowflake ARA-C01 practice questions will certainly assist you to get passing marks on the first attempt.

CramTick offers Snowflake ARA-C01 PDF questions as well as web-based and desktop practice tests, all of which are consistently updated.

CramTick has a support team to answer your queries 24/7. Contact us if you face login, payment, or download issues. We will respond as soon as possible.

Thousands of customers have passed the Snowflake SnowPro Advanced: Architect Certification Exam by using our product. We ensure that you are satisfied when using our exam products.

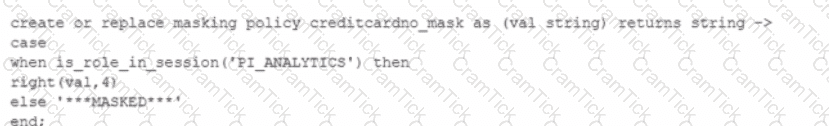

Consider the following scenario where a masking policy is applied on the CREDITCARDNO column of the CREDITCARDINFO table. The masking policy definition is as follows:

Sample data for the CREDITCARDINFO table is as follows:

| NAME | EXPIRYDATE | CREDITCARDNO |
| --- | --- | --- |
| JOHN DOE | 2022-07-23 | 4321 5678 9012 1234 |

If the Snowflake system roles have not been granted any additional roles, what will be the result?

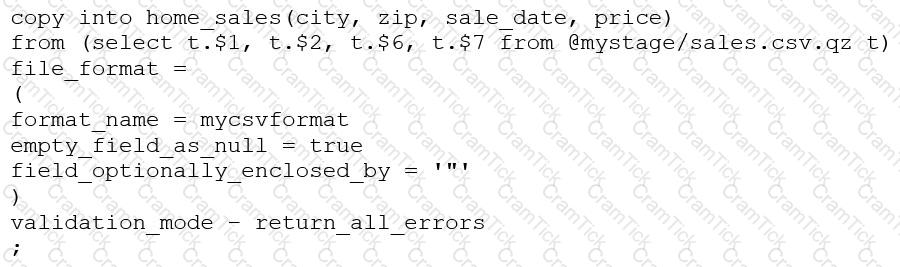

Consider the following COPY command which is loading data with CSV format into a Snowflake table from an internal stage through a data transformation query.

This command results in the following error:

SQL compilation error: invalid parameter 'validation_mode'

Assuming the syntax is correct, what is the cause of this error?