A container image scanner is set up on the cluster.

Given an incomplete configuration in the directory /etc/kubernetes/confcontrol and a functional container image scanner with the HTTPS endpoint https://test-server.local:8081/image_policy:

1. Enable the admission plugin.

2. Validate the control configuration and change it to implicit deny.

Finally, test the configuration by deploying a Pod whose image uses the latest tag.
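
The "implicit deny" step can be sketched as an AdmissionConfiguration like the one below. This is a minimal sketch, not the exam's actual file: the kubeconfig file name under /etc/kubernetes/confcontrol and the TTL and backoff values are assumptions for illustration. The field that matters is defaultAllow, which controls what happens when the scanner backend cannot be reached.

```yaml
# Hypothetical admission configuration file inside /etc/kubernetes/confcontrol.
apiVersion: apiserver.config.k8s.io/v1
kind: AdmissionConfiguration
plugins:
  - name: ImagePolicyWebhook
    configuration:
      imagePolicy:
        # Assumed file name; it must point at the kubeconfig describing the scanner backend.
        kubeConfigFile: /etc/kubernetes/confcontrol/kubeconfig.yaml
        allowTTL: 50       # seconds to cache an "allow" answer (illustrative value)
        denyTTL: 50        # seconds to cache a "deny" answer (illustrative value)
        retryBackoff: 500  # milliseconds between retries (illustrative value)
        defaultAllow: false  # implicit deny: reject images when the backend fails
```

The admission plugin itself is enabled on the API server with --enable-admission-plugins (adding ImagePolicyWebhook to the list) and --admission-control-config-file pointing at this file.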

Documentation: Ingress, Service, NGINX Ingress Controller

You must connect to the correct host. Failure to do so may result in a zero score.

[candidate@base] $ ssh cks000032

Context

You must expose a web application using HTTPS routes.

Task

Create an Ingress resource named web in the prod namespace and configure it as follows:

- Route traffic for host web.k8s.local and all paths to the existing Service web.

- Enable TLS termination using the existing Secret web-cert.

- Redirect HTTP requests to HTTPS.

You can test your Ingress configuration with the following command:

[candidate@cks000032]$ curl -L http://web.k8s.local
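
One way to express this task is the manifest below. It is a sketch under stated assumptions: the ingressClassName nginx and the Service port 80 are not given in the task and should be checked against the cluster (for example with kubectl get ingressclass and kubectl -n prod get svc web). The ssl-redirect annotation is specific to the NGINX Ingress Controller.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: web
  namespace: prod
  annotations:
    # NGINX Ingress Controller annotation: redirect HTTP to HTTPS.
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
spec:
  ingressClassName: nginx  # assumption: verify the class name on the cluster
  tls:
    - hosts:
        - web.k8s.local
      secretName: web-cert  # existing TLS Secret named in the task
  rules:
    - host: web.k8s.local
      http:
        paths:
          - path: /
            pathType: Prefix  # matches all paths
            backend:
              service:
                name: web
                port:
                  number: 80  # assumption: use the port the Service actually exposes
```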

Context

You must fully integrate a container image scanner into the kubeadm provisioned cluster.

Task

Given an incomplete configuration located at /etc/kubernetes/bouncer and a functional container image scanner with an HTTPS endpoint at https://smooth-yak.local/review, perform the following tasks to implement a validating admission controller.

First, re-configure the API server to enable all admission plugin(s) to support the provided AdmissionConfiguration.

Next, re-configure the ImagePolicyWebhook configuration to deny images on backend failure.

Next, complete the backend configuration to point to the container image scanner's endpoint at https://smooth-yak.local/review.

Finally, to test the configuration, deploy the test resource defined in /home/candidate/vulnerable.yaml which is using an image that should be denied.

You may delete and re-create the resource as often as needed.

The container image scanner's log file is located at /var/log/nginx/access_log.
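
The backend configuration for ImagePolicyWebhook is a kubeconfig-format file whose cluster entry points at the scanner. The sketch below shows the shape of such a file; the certificate and key paths and the entry names are hypothetical placeholders, and only the server URL comes from the task.

```yaml
# Hypothetical kubeconfig for the webhook backend under /etc/kubernetes/bouncer.
apiVersion: v1
kind: Config
clusters:
  - name: image-scanner            # illustrative name
    cluster:
      certificate-authority: /etc/kubernetes/bouncer/ca.crt  # assumed path
      server: https://smooth-yak.local/review                # endpoint from the task
contexts:
  - name: image-scanner
    context:
      cluster: image-scanner
      user: api-server
current-context: image-scanner
users:
  - name: api-server               # illustrative name
    user:
      client-certificate: /etc/kubernetes/bouncer/client.crt  # assumed path
      client-key: /etc/kubernetes/bouncer/client.key          # assumed path
```

After deploying the test resource, a rejected request should show up as a new entry in the scanner's access log.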

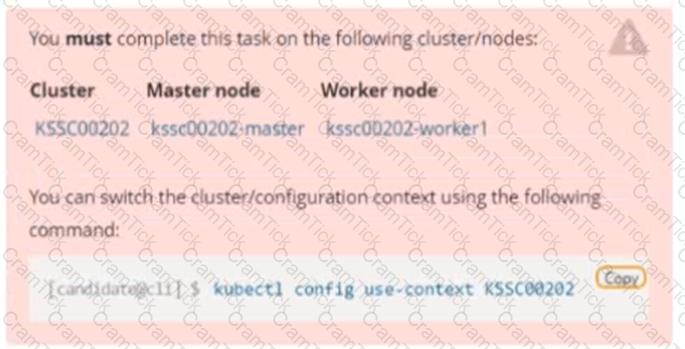

Context:

Cluster: gvisor

Master node: master1

Worker node: worker1

You can switch the cluster/configuration context using the following command:

[desk@cli] $ kubectl config use-context gvisor

Context: This cluster has been prepared to support the runsc runtime handler as well as the traditional one.

Task:

Create a RuntimeClass named not-trusted using the prepared runtime handler named runsc.

Update all Pods in the namespace server to run on the new runtime.
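
A minimal sketch of the two pieces involved is shown below. The Deployment name, labels, and image are hypothetical stand-ins for whatever workloads already exist in the namespace server; the relevant change is the runtimeClassName field in the Pod template.

```yaml
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: not-trusted
handler: runsc  # the prepared gVisor handler
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: example-workload   # hypothetical: edit the existing workloads instead
  namespace: server
spec:
  replicas: 1
  selector:
    matchLabels:
      app: example-workload
  template:
    metadata:
      labels:
        app: example-workload
    spec:
      runtimeClassName: not-trusted  # run the Pods on the new runtime
      containers:
        - name: app
          image: nginx               # hypothetical image
```

Because runtimeClassName cannot be changed on a running Pod, bare Pods have to be deleted and re-created, while Deployments roll out new Pods once their template is edited.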

You can switch the cluster/configuration context using the following command:

[desk@cli] $ kubectl config use-context test-account

Task: Enable audit logs in the cluster.

To do so, enable the log backend, and ensure that:

1. logs are stored at /var/log/Kubernetes/logs.txt

2. log files are retained for 5 days

3. at most 10 old audit log files are retained

A basic policy is provided at /etc/Kubernetes/logpolicy/audit-policy.yaml. It only specifies what not to log.

Note: The base policy is located on the cluster's master node.

Edit and extend the basic policy to log:

1. Node changes at the RequestResponse level

2. The request body of persistentvolumes changes in the namespace frontend

3. ConfigMap and Secret changes in all namespaces at the Metadata level

Also, add a catch-all rule to log all other requests at the Metadata level.

Note: Don't forget to apply the modified policy.
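
The requested rules could be appended to the base policy roughly as follows. This is a sketch: the existing "do not log" rules of the provided file are not shown and must stay in place above these entries, since audit rules are evaluated in order and the first match wins.

```yaml
# Sketch of additions to /etc/Kubernetes/logpolicy/audit-policy.yaml.
# Related kube-apiserver flags for the log backend:
#   --audit-policy-file=/etc/Kubernetes/logpolicy/audit-policy.yaml
#   --audit-log-path=/var/log/Kubernetes/logs.txt
#   --audit-log-maxage=5
#   --audit-log-maxbackup=10
apiVersion: audit.k8s.io/v1
kind: Policy
rules:
  # (existing rules from the base policy remain here, unchanged)

  # 1. Node changes at RequestResponse level
  - level: RequestResponse
    resources:
      - group: ""
        resources: ["nodes"]

  # 2. Request body of persistentvolumes changes in namespace frontend
  - level: Request
    resources:
      - group: ""
        resources: ["persistentvolumes"]
    namespaces: ["frontend"]

  # 3. ConfigMap and Secret changes in all namespaces at Metadata level
  - level: Metadata
    resources:
      - group: ""
        resources: ["configmaps", "secrets"]

  # Catch-all: everything else at Metadata level
  - level: Metadata
```

On a kubeadm cluster the policy file and the log directory also need to be mounted into the kube-apiserver static Pod, otherwise the API server cannot read the policy or write the log.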

Fix all issues via configuration and restart the affected components to ensure the new setting takes effect.

Fix all of the following violations that were found against the API server:

a. Ensure that the RotateKubeletServerCertificate argument is set to true.

b. Ensure that the admission control plugin PodSecurityPolicy is set.

c. Ensure that the --kubelet-certificate-authority argument is set as appropriate.

Fix all of the following violations that were found against the kubelet:

a. Ensure the --anonymous-auth argument is set to false.

b. Ensure that the --authorization-mode argument is set to Webhook.

Fix all of the following violations that were found against etcd:

a. Ensure that the --auto-tls argument is not set to true

b. Ensure that the --peer-auto-tls argument is not set to true

Hint: Use the kube-bench tool.
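
For the kubelet findings, the equivalent settings in a kubelet configuration file look like the sketch below. It assumes the kubelet is driven by a config file (on kubeadm clusters this is typically /var/lib/kubelet/config.yaml) rather than by command-line flags; if flags are used instead, the same values go on --anonymous-auth and --authorization-mode.

```yaml
# Sketch of the relevant part of a KubeletConfiguration.
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
authentication:
  anonymous:
    enabled: false   # equivalent of --anonymous-auth=false
  webhook:
    enabled: true    # needed so the API server can authenticate callers for the kubelet
authorization:
  mode: Webhook      # equivalent of --authorization-mode=Webhook
# After editing, restart the kubelet (for example: systemctl restart kubelet).
# The etcd findings are fixed in the etcd static Pod manifest by removing
# --auto-tls=true and --peer-auto-tls=true (or setting them to false).
```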

Context

A container image scanner is set up on the cluster, but it is not yet fully integrated into the cluster's configuration. When complete, the container image scanner shall scan for and reject the use of vulnerable images.

Task

Given an incomplete configuration in the directory /etc/kubernetes/epconfig and a functional container image scanner with the HTTPS endpoint https://wakanda.local:8081/image_policy:

1. Enable the necessary plugins to create an image policy

2. Validate the control configuration and change it to an implicit deny

3. Edit the configuration to point to the provided HTTPS endpoint correctly

Finally, test if the configuration is working by trying to deploy the vulnerable resource /root/KSSC00202/vulnerable-resource.yml.
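
Step 1 amounts to editing the API server's static Pod manifest. The fragment below is a sketch assuming a kubeadm-style layout (/etc/kubernetes/manifests/kube-apiserver.yaml); the admission configuration file name inside /etc/kubernetes/epconfig and the volume name are hypothetical, and all other existing flags, mounts, and volumes are omitted.

```yaml
# Fragment of the kube-apiserver static Pod manifest (other fields omitted).
spec:
  containers:
    - name: kube-apiserver
      command:
        - kube-apiserver
        # Add ImagePolicyWebhook to whatever plugins are already enabled.
        - --enable-admission-plugins=NodeRestriction,ImagePolicyWebhook
        # Assumed file name: use the actual file found in /etc/kubernetes/epconfig.
        - --admission-control-config-file=/etc/kubernetes/epconfig/admission_configuration.json
      volumeMounts:
        - name: epconfig               # hypothetical volume name
          mountPath: /etc/kubernetes/epconfig
          readOnly: true
  volumes:
    - name: epconfig
      hostPath:
        path: /etc/kubernetes/epconfig
        type: DirectoryOrCreate
```

Without the volume mount the API server container cannot see the configuration directory and will fail to start, so it is worth watching the container come back up before testing with the vulnerable resource.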

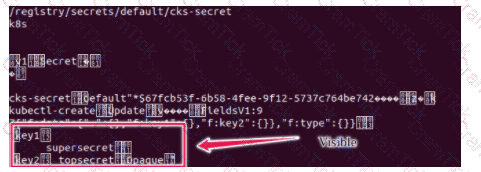

Secrets stored in etcd are not encrypted at rest. You can use the etcdctl command-line utility to read a Secret's value, for example:

ETCDCTL_API=3 etcdctl get /registry/secrets/default/cks-secret --cacert="ca.crt" --cert="server.crt" --key="server.key"

Output

Using an EncryptionConfiguration, create a manifest that secures the secrets resource with the aescbc and identity providers so that Secret data is encrypted at rest. Then ensure all existing Secrets are encrypted with the new configuration.
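
A minimal sketch of such a manifest follows. The key name key1 is illustrative, and the secret value is a placeholder that must be replaced with a base64-encoded 32-byte random key; the file location is not specified by the task.

```yaml
apiVersion: apiserver.config.k8s.io/v1
kind: EncryptionConfiguration
resources:
  - resources:
      - secrets
    providers:
      # The first provider is used for writes, so new Secrets are encrypted with AES-CBC.
      - aescbc:
          keys:
            - name: key1                            # illustrative key name
              secret: <BASE64_ENCODED_32_BYTE_KEY>  # placeholder, generate your own
      # identity allows Secrets that are still stored unencrypted to be read.
      - identity: {}
# The API server picks this file up through --encryption-provider-config=<path>.
# Existing Secrets are only re-encrypted when rewritten, for example:
#   kubectl get secrets --all-namespaces -o json | kubectl replace -f -
```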

On the cluster's worker node, enforce the prepared AppArmor profile:

    #include <tunables/global>

    profile nginx-deny flags=(attach_disconnected) {
      #include <abstractions/base>

      file,

      # Deny all file writes.
      deny /** w,
    }

Edit the prepared manifest file to include the AppArmor profile.

    apiVersion: v1
    kind: Pod
    metadata:
      name: apparmor-pod
    spec:
      containers:
      - name: apparmor-pod
        image: nginx

Finally, apply the manifest file and create the Pod specified in it.

Verify: Try to create a file inside a directory where writes are restricted.
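
The edited manifest could look like the sketch below, assuming the profile has already been loaded on the worker node (for example with apparmor_parser) under the name nginx-deny. It uses the annotation form; on Kubernetes v1.30 and later the same intent can be expressed with the securityContext.appArmorProfile field instead.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: apparmor-pod
  annotations:
    # Key format: container.apparmor.security.beta.kubernetes.io/<container-name>
    container.apparmor.security.beta.kubernetes.io/apparmor-pod: localhost/nginx-deny
spec:
  containers:
    - name: apparmor-pod
      image: nginx
```

A write attempt such as kubectl exec apparmor-pod -- touch /tmp/test should then fail with a permission error.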

Create a RuntimeClass named untrusted using the prepared runtime handler named runsc.

Create a Pod using the image alpine:3.13.2 in the default namespace that runs on the gVisor RuntimeClass.
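
As a sketch, the two resources might be written as follows. The Pod name and the sleep command are assumptions added for illustration (alpine exits immediately without a long-running command); the RuntimeClass name, handler, image, and namespace come from the task.

```yaml
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: untrusted
handler: runsc
---
apiVersion: v1
kind: Pod
metadata:
  name: alpine-gvisor        # hypothetical name
  namespace: default
spec:
  runtimeClassName: untrusted
  containers:
    - name: alpine
      image: alpine:3.13.2
      command: ["sleep", "3600"]  # assumption: keeps the container running
```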

A service is running on port 389 on the system. Find the process ID of that process, store the names of all its open files in /candidate/KH77539/files.txt, and delete the binary.

Documentation

Installing the Sidecar, PeerAuthentication, Deployments

You must connect to the correct host. Failure to do so may result in a zero score.

[candidate@base] $ ssh cks000041

Context

A microservices-based application using unencrypted Layer 4 (L4) transport must be secured with Istio.

Task

Perform the following tasks to secure an existing application's Layer 4 (L4) transport communication using Istio.

Istio is installed to secure Layer 4 (L4) communications.

You may use your browser to access Istio's documentation.

First, ensure that all Pods in the mtls namespace have the istio-proxy sidecar injected.

Next, configure mutual authentication in strict mode for all workloads in the mtls namespace.
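
Both steps can be sketched declaratively as below. This assumes label-based automatic sidecar injection is in use (the istio-injection label; revision-based installs use an istio.io/rev label instead) and names the PeerAuthentication default, which is the conventional name for a namespace-wide policy.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: mtls
  labels:
    istio-injection: enabled   # enables automatic istio-proxy sidecar injection
---
apiVersion: security.istio.io/v1beta1
kind: PeerAuthentication
metadata:
  name: default
  namespace: mtls
spec:
  mtls:
    mode: STRICT   # only mutual TLS traffic is accepted by workloads in this namespace
```

Injection happens at Pod creation, so Pods that already exist need to be re-created (for example with kubectl -n mtls rollout restart deployment) before they show the istio-proxy container.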

Use Trivy to scan the following images:

1. amazonlinux:1

2. k8s.gcr.io/kube-controller-manager:v1.18.6

Look for images with HIGH or CRITICAL severity vulnerabilities and store the output in /opt/trivy-vulnerable.txt.

Cluster: qa-cluster

Master node: master

Worker node: worker1

You can switch the cluster/configuration context using the following command:

[desk@cli] $ kubectl config use-context qa-cluster

Task:

Create a NetworkPolicy named restricted-policy to restrict access to Pod product running in namespace dev.

Only allow the following Pods to connect to Pod products-service:

1. Pods in the namespace qa

2. Pods with label environment: stage, in any namespace
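
A sketch of such a policy is shown below. The podSelector label app: products-service is an assumption (check the Pod's real labels with kubectl -n dev get pods --show-labels), and selecting namespace qa by the kubernetes.io/metadata.name label relies on the label Kubernetes sets automatically on namespaces in recent versions.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: restricted-policy
  namespace: dev
spec:
  podSelector:
    matchLabels:
      app: products-service   # assumed label: use the Pod's actual labels
  policyTypes:
    - Ingress
  ingress:
    - from:
        # 1. Any Pod in namespace qa
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: qa
        # 2. Pods labelled environment=stage in any namespace
        - namespaceSelector: {}
          podSelector:
            matchLabels:
              environment: stage
```

The two from entries are separate list items, so they are combined with OR; putting namespaceSelector and podSelector in the same item, as in the second entry, combines them with AND.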

Task

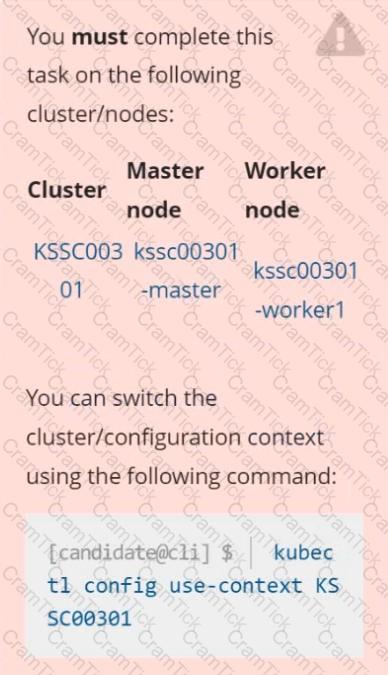

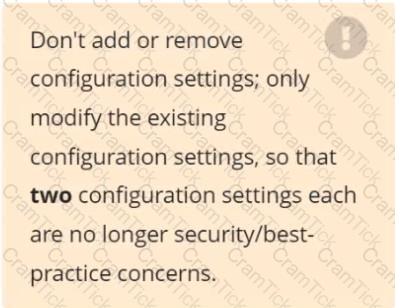

Analyze and edit the given Dockerfile /home/candidate/KSSC00301/Dockerfile (based on the ubuntu:16.04 image), fixing two instructions present in the file that are prominent security/best-practice issues.

Analyze and edit the given manifest file /home/candidate/KSSC00301/deployment.yaml, fixing two fields present in the file that are prominent security/best-practice issues.

You can switch the cluster/configuration context using the following command:

[desk@cli] $ kubectl config use-context stage

Context:

A PodSecurityPolicy shall prevent the creation of privileged Pods in a specific namespace.

Task:

1. Create a new PodSecurityPolicy named deny-policy, which prevents the creation of privileged Pods.

2. Create a new ClusterRole named deny-access-role, which uses the newly created PodSecurityPolicy deny-policy.

3. Create a new ServiceAccount named psp-denial-sa in the existing namespace development.

Finally, create a new ClusterRoleBinding named restrict-access-bind, which binds the newly created ClusterRole deny-access-role to the newly created ServiceAccount psp-denial-sa.
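
The four resources could be sketched as follows. Note that PodSecurityPolicy (policy/v1beta1) was removed in Kubernetes v1.25, so this only applies to older clusters. The RunAsAny rules and the wildcard volumes entry are assumptions chosen to leave everything except privileged mode unrestricted; the ServiceAccount name follows the binding step.

```yaml
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: deny-policy
spec:
  privileged: false          # the actual restriction: no privileged Pods
  seLinux:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  runAsUser:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  volumes:
    - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: deny-access-role
rules:
  - apiGroups: ["policy"]
    resources: ["podsecuritypolicies"]
    resourceNames: ["deny-policy"]
    verbs: ["use"]
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: psp-denial-sa
  namespace: development
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: restrict-access-bind
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: deny-access-role
subjects:
  - kind: ServiceAccount
    name: psp-denial-sa
    namespace: development
```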
